%global _empty_manifest_terminate_build 0

Name: python-Proxy-List-Scrapper

Version: 0.2.2

Release: 1

Summary: Scrapes free proxy lists from various websites for temporary use

License: MIT

URL: https://pypi.org/project/Proxy-List-Scrapper/

Source0: https://mirrors.nju.edu.cn/pypi/web/packages/be/2e/3bc7b7de8d1372e515bdf8dba313abf91311052cb95d40c2865f636b1c8b/Proxy-List-Scrapper-0.2.2.tar.gz

BuildArch: noarch

%description

# Proxy-List-Scrapper

#### [demo live example using javascript](https://narkhedesam.github.io/Proxy-List-Scrapper)

Proxy List Scrapper collects proxy lists from various websites.

These websites provide free proxies for temporary use.

### What is a proxy

A proxy is a server that acts as a gateway or intermediary between a device and the rest of the internet. A proxy accepts and forwards connection requests, then returns the data for those requests. This basic definition is quite limited, because there are dozens of proxy types, each with its own configuration.

### What are the most popular types of proxies:

Residential proxies, Datacenter proxies, Anonymous proxies, Transparent proxies

### People use proxies to:

Avoid Geo-restrictions, Protect Privacy and Increase Security, Avoid Firewalls and Bans, Automate Online Processes, Use Multiple Accounts and Gather Data

#### Chrome Extension

You can download the "Free Proxy List Scrapper Chrome Extension" folder and load it in Chrome as an extension.

## Web_Scrapper Module

Web Scrapper is a proxy web scraper that uses the rotating proxy API from https://scrape.do

You can check the official documentation for details.

You can send requests to any web page through the proxy gateway and web API provided by scrape.do

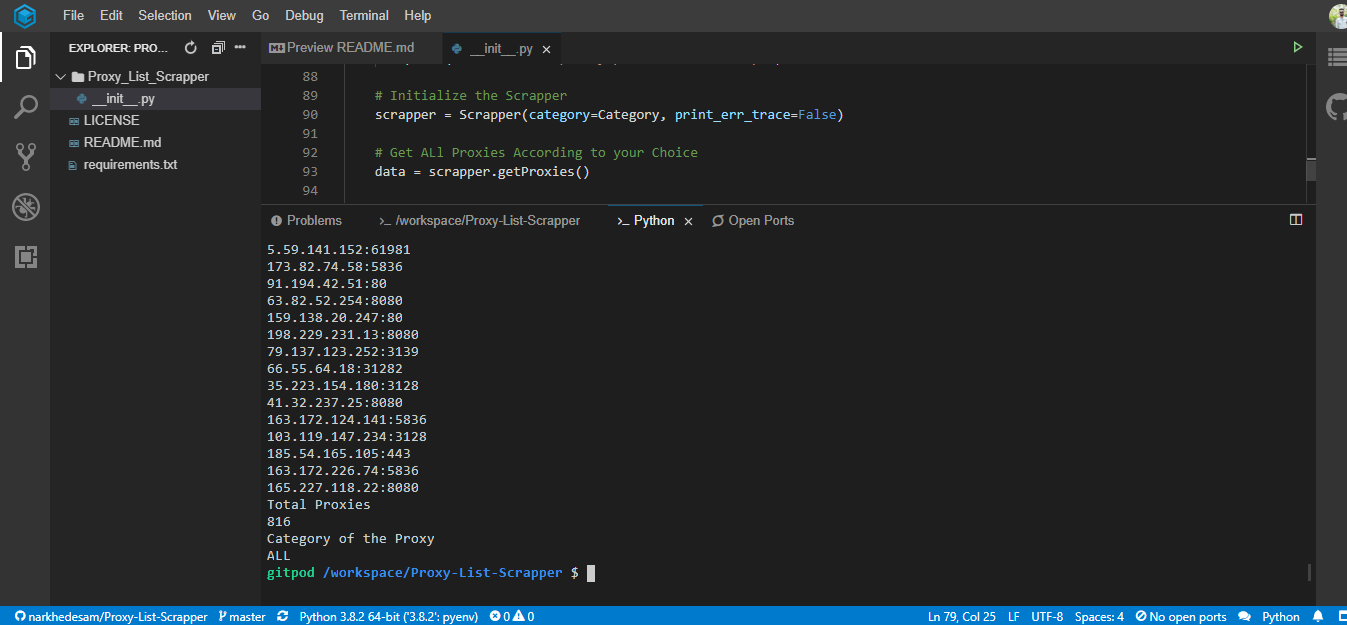

## How to use Proxy List Scrapper

You can clone this project from GitHub, or install it with pip:

pip install Proxy-List-Scrapper

Make sure requests and urllib3 are installed in your Python environment.

Then add the following import:

from Proxy_List_Scrapper import Scrapper, Proxy, ScrapperException

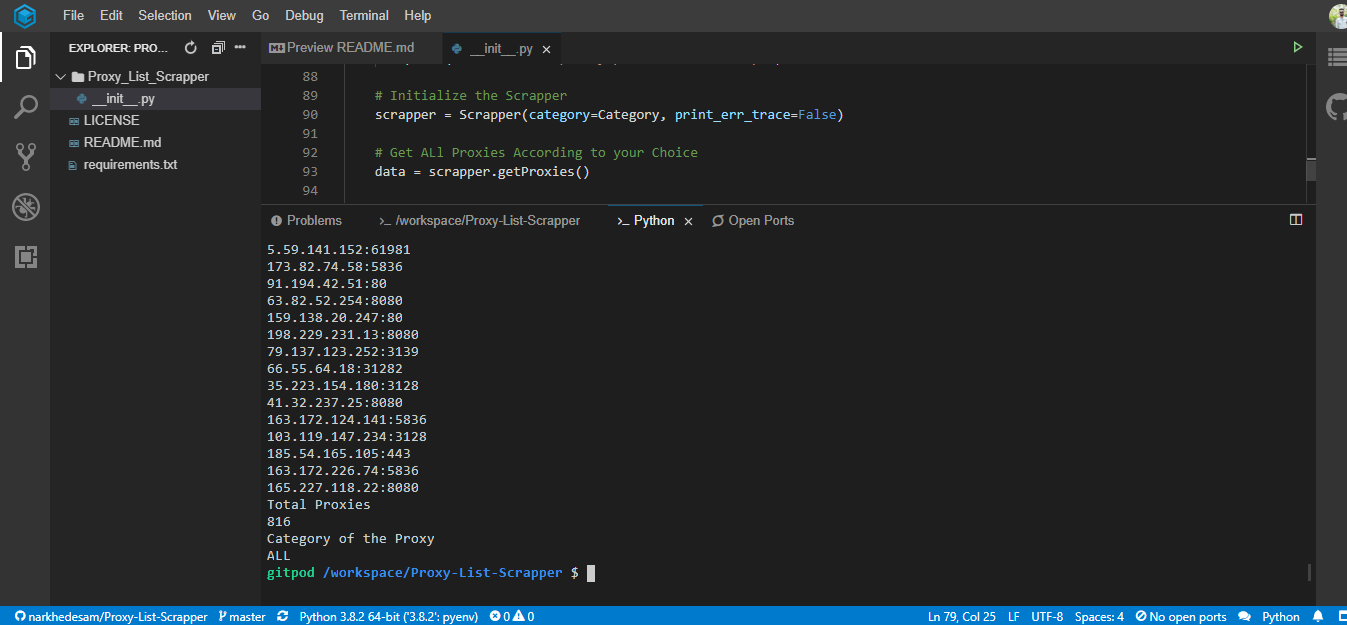

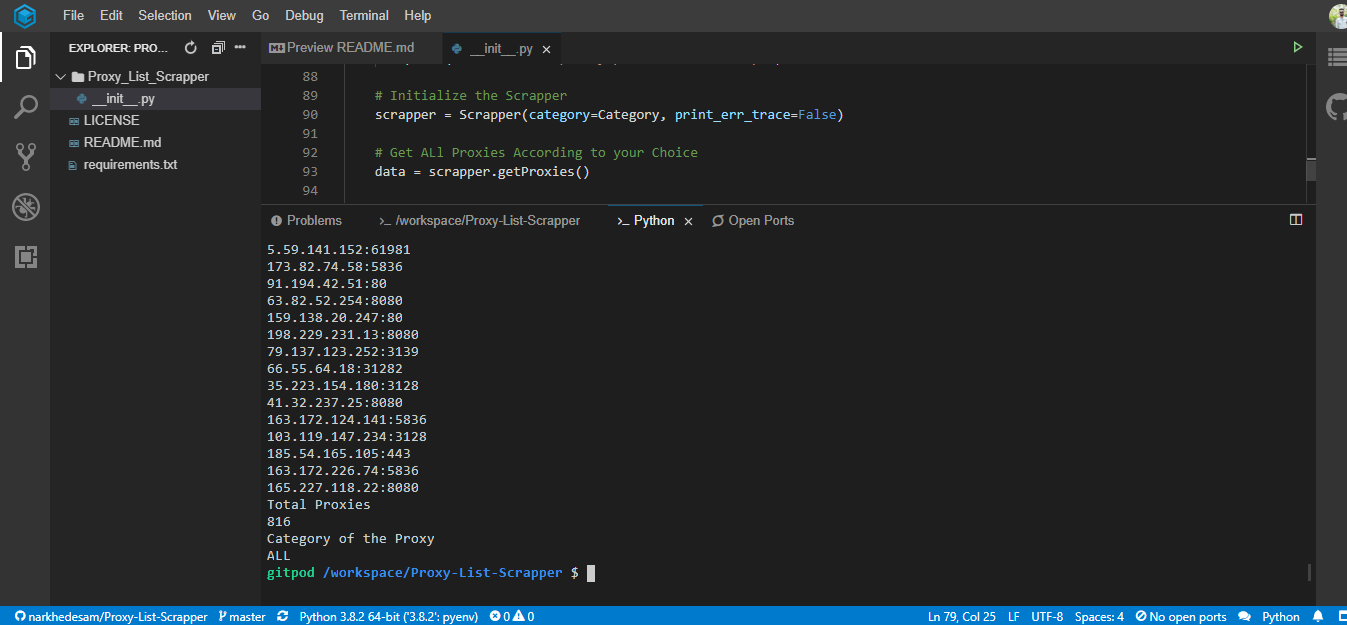

After that, simply create an object of the Scrapper class named "scrapper":

scrapper = Scrapper(category=Category, print_err_trace=False)

Here you need to specify one of the categories defined below:

SSL = 'https://www.sslproxies.org/',

GOOGLE = 'https://www.google-proxy.net/',

ANANY = 'https://free-proxy-list.net/anonymous-proxy.html',

UK = 'https://free-proxy-list.net/uk-proxy.html',

US = 'https://www.us-proxy.org/',

NEW = 'https://free-proxy-list.net/',

SPYS_ME = 'http://spys.me/proxy.txt',

PROXYSCRAPE = 'https://api.proxyscrape.com/?request=getproxies&proxytype=all&country=all&ssl=all&anonymity=all',

PROXYNOVA = 'https://www.proxynova.com/proxy-server-list/'

PROXYLIST_DOWNLOAD_HTTP = 'https://www.proxy-list.download/HTTP'

PROXYLIST_DOWNLOAD_HTTPS = 'https://www.proxy-list.download/HTTPS'

PROXYLIST_DOWNLOAD_SOCKS4 = 'https://www.proxy-list.download/SOCKS4'

PROXYLIST_DOWNLOAD_SOCKS5 = 'https://www.proxy-list.download/SOCKS5'

ALL = 'ALL'

These are all categories.
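
As a rough sketch of how a category name could be checked before it is handed to Scrapper, the snippet below copies a subset of the names and URLs from the list above into a plain dictionary. The names CATEGORY_URLS and resolve_category are hypothetical and used for illustration only; they are not part of the Proxy_List_Scrapper API.

```python
# Hypothetical lookup table copied from the category list above (subset only).
CATEGORY_URLS = {
    "SSL": "https://www.sslproxies.org/",
    "GOOGLE": "https://www.google-proxy.net/",
    "ANANY": "https://free-proxy-list.net/anonymous-proxy.html",
    "UK": "https://free-proxy-list.net/uk-proxy.html",
    "US": "https://www.us-proxy.org/",
    "NEW": "https://free-proxy-list.net/",
    "SPYS_ME": "http://spys.me/proxy.txt",
    "ALL": "ALL",
}


def resolve_category(name):
    """Return the source URL for a category name, ignoring case and padding.

    Raises ValueError for names that are not in CATEGORY_URLS.
    """
    key = name.strip().upper()
    if key not in CATEGORY_URLS:
        valid = ", ".join(sorted(CATEGORY_URLS))
        raise ValueError(f"Unknown category {name!r}; expected one of: {valid}")
    return CATEGORY_URLS[key]


if __name__ == "__main__":
    print(resolve_category("ssl"))
```

Validating the name up front gives a clear error message before any network request is attempted.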

After that, call the function named "getProxies":

# Get ALL Proxies According to your Choice

data = scrapper.getProxies()

The function returns a response object, stored here in data.

The data object has three fields: proxies, len, and category.

- @proxies is the list of Proxy objects, each holding an actual proxy.

- @len is the total number of proxies in @proxies.

- @category is the category of the proxies, as defined above.

## You can handle the response data as below

# Print These Scrapped Proxies

print("Scrapped Proxies:")

for item in data.proxies:

    print('{}:{}'.format(item.ip, item.port))

# Print the size of proxies scrapped

print("Total Proxies")

print(data.len)

# Print the Category of proxy from which you scrapped

print("Category of the Proxy")

print(data.category)
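
Since requests is listed as a dependency above, one possible next step is to turn a scraped entry into the proxies mapping that requests accepts. The sketch below is only an illustration under stated assumptions: StandInProxy is a stand-in type that mirrors just the ip and port attributes used in the loop above, to_requests_proxies is a hypothetical helper that is not part of Proxy_List_Scrapper, and the proxy is assumed to speak plain HTTP unless another scheme is given.

```python
from collections import namedtuple

# Stand-in for the package's Proxy class; only the ip and port
# attributes shown in the loop above are assumed here.
StandInProxy = namedtuple("StandInProxy", ["ip", "port"])


def to_requests_proxies(proxy, scheme="http"):
    """Build a requests-style proxies mapping from one proxy entry."""
    address = "{}://{}:{}".format(scheme, proxy.ip, proxy.port)
    # requests picks the proxy by the scheme of the target URL,
    # so the same address is registered for both http and https.
    return {"http": address, "https": address}


if __name__ == "__main__":
    # 203.0.113.10 is a documentation-only address, not a real proxy.
    sample = StandInProxy(ip="203.0.113.10", port="8080")
    print(to_requests_proxies(sample))
```

The resulting dictionary could then be passed as requests.get(url, proxies=mapping, timeout=10). Free proxies are often unreliable, so setting a timeout is advisable.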

## Author

Sameer Narkhede

### Thanks for giving free proxies

- https://www.sslproxies.org/

- https://www.google-proxy.net/

- https://free-proxy-list.net/anonymous-proxy.html

- https://free-proxy-list.net/uk-proxy.html

- https://www.us-proxy.org/

- https://free-proxy-list.net/

- http://spys.me/proxy.txt

- https://proxyscrape.com/

- https://www.proxynova.com/proxy-server-list/

- https://www.proxy-list.download/

## Donation

If this project helps you reduce development time, you can buy me a cup of coffee :relaxed:

https://paypal.me/sameernarkhede/250

%package -n python3-Proxy-List-Scrapper

Summary: Scrapes free proxy lists from various websites for temporary use

Provides: python-Proxy-List-Scrapper

BuildRequires: python3-devel

BuildRequires: python3-setuptools

BuildRequires: python3-pip

%description -n python3-Proxy-List-Scrapper

# Proxy-List-Scrapper

#### [demo live example using javascript](https://narkhedesam.github.io/Proxy-List-Scrapper)

Proxy List Scrapper collects proxy lists from various websites.

These websites provide free proxies for temporary use.

### What is a proxy

A proxy is a server that acts as a gateway or intermediary between a device and the rest of the internet. A proxy accepts and forwards connection requests, then returns the data for those requests. This basic definition is quite limited, because there are dozens of proxy types, each with its own configuration.

### What are the most popular types of proxies:

Residential proxies, Datacenter proxies, Anonymous proxies, Transparent proxies

### People use proxies to:

Avoid Geo-restrictions, Protect Privacy and Increase Security, Avoid Firewalls and Bans, Automate Online Processes, Use Multiple Accounts and Gather Data

#### Chrome Extension

You can download the "Free Proxy List Scrapper Chrome Extension" folder and load it in Chrome as an extension.

## Web_Scrapper Module

Web Scrapper is a proxy web scraper that uses the rotating proxy API from https://scrape.do

You can check the official documentation for details.

You can send requests to any web page through the proxy gateway and web API provided by scrape.do

## How to use Proxy List Scrapper

You can clone this project from GitHub, or install it with pip:

pip install Proxy-List-Scrapper

Make sure requests and urllib3 are installed in your Python environment.

Then add the following import:

from Proxy_List_Scrapper import Scrapper, Proxy, ScrapperException

After that, simply create an object of the Scrapper class named "scrapper":

scrapper = Scrapper(category=Category, print_err_trace=False)

Here you need to specify one of the categories defined below:

SSL = 'https://www.sslproxies.org/',

GOOGLE = 'https://www.google-proxy.net/',

ANANY = 'https://free-proxy-list.net/anonymous-proxy.html',

UK = 'https://free-proxy-list.net/uk-proxy.html',

US = 'https://www.us-proxy.org/',

NEW = 'https://free-proxy-list.net/',

SPYS_ME = 'http://spys.me/proxy.txt',

PROXYSCRAPE = 'https://api.proxyscrape.com/?request=getproxies&proxytype=all&country=all&ssl=all&anonymity=all',

PROXYNOVA = 'https://www.proxynova.com/proxy-server-list/'

PROXYLIST_DOWNLOAD_HTTP = 'https://www.proxy-list.download/HTTP'

PROXYLIST_DOWNLOAD_HTTPS = 'https://www.proxy-list.download/HTTPS'

PROXYLIST_DOWNLOAD_SOCKS4 = 'https://www.proxy-list.download/SOCKS4'

PROXYLIST_DOWNLOAD_SOCKS5 = 'https://www.proxy-list.download/SOCKS5'

ALL = 'ALL'

These are all categories.

After that, call the function named "getProxies":

# Get ALL Proxies According to your Choice

data = scrapper.getProxies()

The function returns a response object, stored here in data.

The data object has three fields: proxies, len, and category.

- @proxies is the list of Proxy objects, each holding an actual proxy.

- @len is the total number of proxies in @proxies.

- @category is the category of the proxies, as defined above.

## You can handle the response data as below

# Print These Scrapped Proxies

print("Scrapped Proxies:")

for item in data.proxies:

    print('{}:{}'.format(item.ip, item.port))

# Print the size of proxies scrapped

print("Total Proxies")

print(data.len)

# Print the Category of proxy from which you scrapped

print("Category of the Proxy")

print(data.category)

## Author

Sameer Narkhede

### Thanks for giving free proxies

- https://www.sslproxies.org/

- https://www.google-proxy.net/

- https://free-proxy-list.net/anonymous-proxy.html

- https://free-proxy-list.net/uk-proxy.html

- https://www.us-proxy.org/

- https://free-proxy-list.net/

- http://spys.me/proxy.txt

- https://proxyscrape.com/

- https://www.proxynova.com/proxy-server-list/

- https://www.proxy-list.download/

## Donation

If this project helps you reduce development time, you can buy me a cup of coffee :relaxed:

https://paypal.me/sameernarkhede/250

%package help

Summary: Development documents and examples for Proxy-List-Scrapper

Provides: python3-Proxy-List-Scrapper-doc

%description help

# Proxy-List-Scrapper

#### [demo live example using javascript](https://narkhedesam.github.io/Proxy-List-Scrapper)

Proxy List Scrapper collects proxy lists from various websites.

These websites provide free proxies for temporary use.

### What is a proxy

A proxy is a server that acts as a gateway or intermediary between a device and the rest of the internet. A proxy accepts and forwards connection requests, then returns the data for those requests. This basic definition is quite limited, because there are dozens of proxy types, each with its own configuration.

### What are the most popular types of proxies:

Residential proxies, Datacenter proxies, Anonymous proxies, Transparent proxies

### People use proxies to:

Avoid Geo-restrictions, Protect Privacy and Increase Security, Avoid Firewalls and Bans, Automate Online Processes, Use Multiple Accounts and Gather Data

#### Chrome Extension

You can download the "Free Proxy List Scrapper Chrome Extension" folder and load it in Chrome as an extension.

## Web_Scrapper Module

Web Scrapper is a proxy web scraper that uses the rotating proxy API from https://scrape.do

You can check the official documentation for details.

You can send requests to any web page through the proxy gateway and web API provided by scrape.do

## How to use Proxy List Scrapper

You can clone this project from GitHub, or install it with pip:

pip install Proxy-List-Scrapper

Make sure requests and urllib3 are installed in your Python environment.

Then add the following import:

from Proxy_List_Scrapper import Scrapper, Proxy, ScrapperException

After that, simply create an object of the Scrapper class named "scrapper":

scrapper = Scrapper(category=Category, print_err_trace=False)

Here you need to specify one of the categories defined below:

SSL = 'https://www.sslproxies.org/',

GOOGLE = 'https://www.google-proxy.net/',

ANANY = 'https://free-proxy-list.net/anonymous-proxy.html',

UK = 'https://free-proxy-list.net/uk-proxy.html',

US = 'https://www.us-proxy.org/',

NEW = 'https://free-proxy-list.net/',

SPYS_ME = 'http://spys.me/proxy.txt',

PROXYSCRAPE = 'https://api.proxyscrape.com/?request=getproxies&proxytype=all&country=all&ssl=all&anonymity=all',

PROXYNOVA = 'https://www.proxynova.com/proxy-server-list/'

PROXYLIST_DOWNLOAD_HTTP = 'https://www.proxy-list.download/HTTP'

PROXYLIST_DOWNLOAD_HTTPS = 'https://www.proxy-list.download/HTTPS'

PROXYLIST_DOWNLOAD_SOCKS4 = 'https://www.proxy-list.download/SOCKS4'

PROXYLIST_DOWNLOAD_SOCKS5 = 'https://www.proxy-list.download/SOCKS5'

ALL = 'ALL'

These are all categories.

After that, call the function named "getProxies":

# Get ALL Proxies According to your Choice

data = scrapper.getProxies()

The function returns a response object, stored here in data.

The data object has three fields: proxies, len, and category.

- @proxies is the list of Proxy objects, each holding an actual proxy.

- @len is the total number of proxies in @proxies.

- @category is the category of the proxies, as defined above.

## You can handle the response data as below

# Print These Scrapped Proxies

print("Scrapped Proxies:")

for item in data.proxies:

    print('{}:{}'.format(item.ip, item.port))

# Print the size of proxies scrapped

print("Total Proxies")

print(data.len)

# Print the Category of proxy from which you scrapped

print("Category of the Proxy")

print(data.category)

## Author

Sameer Narkhede

### Thanks for giving free proxies

- https://www.sslproxies.org/

- https://www.google-proxy.net/

- https://free-proxy-list.net/anonymous-proxy.html

- https://free-proxy-list.net/uk-proxy.html

- https://www.us-proxy.org/

- https://free-proxy-list.net/

- http://spys.me/proxy.txt

- https://proxyscrape.com/

- https://www.proxynova.com/proxy-server-list/

- https://www.proxy-list.download/

## Donation

If this project helps you reduce development time, you can buy me a cup of coffee :relaxed:

https://paypal.me/sameernarkhede/250

%prep

%autosetup -n Proxy-List-Scrapper-0.2.2

%build

%py3_build

%install

%py3_install

install -d -m755 %{buildroot}/%{_pkgdocdir}

if [ -d doc ]; then cp -arf doc %{buildroot}/%{_pkgdocdir}; fi

if [ -d docs ]; then cp -arf docs %{buildroot}/%{_pkgdocdir}; fi

if [ -d example ]; then cp -arf example %{buildroot}/%{_pkgdocdir}; fi

if [ -d examples ]; then cp -arf examples %{buildroot}/%{_pkgdocdir}; fi

pushd %{buildroot}

if [ -d usr/lib ]; then

find usr/lib -type f -printf "/%h/%f\n" >> filelist.lst

fi

if [ -d usr/lib64 ]; then

find usr/lib64 -type f -printf "/%h/%f\n" >> filelist.lst

fi

if [ -d usr/bin ]; then

find usr/bin -type f -printf "/%h/%f\n" >> filelist.lst

fi

if [ -d usr/sbin ]; then

find usr/sbin -type f -printf "/%h/%f\n" >> filelist.lst

fi

touch doclist.lst

if [ -d usr/share/man ]; then

find usr/share/man -type f -printf "/%h/%f.gz\n" >> doclist.lst

fi

popd

mv %{buildroot}/filelist.lst .

mv %{buildroot}/doclist.lst .

%files -n python3-Proxy-List-Scrapper -f filelist.lst

%dir %{python3_sitelib}/*

%files help -f doclist.lst

%{_docdir}/*

%changelog

* Mon May 29 2023 Python_Bot - 0.2.2-1

- Package Spec generated