
| author | CoprDistGit <infra@openeuler.org> | 2023-05-29 13:26:46 +0000 |
|---|---|---|
| committer | CoprDistGit <infra@openeuler.org> | 2023-05-29 13:26:46 +0000 |
| commit | ba313e2ac0c7c7c2d6c259ad75b3b32de16279e7 | |
| tree | c96072af39c40c55f404f3881d194fcc905556c9 | |
| parent | 69dffdaad84c3e599a107eed5134ea313a57bf28 | |

automatic import of python-ai-integration

| Mode | File | Lines changed |
|---|---|---|
| -rw-r--r-- | .gitignore | 1 |
| -rw-r--r-- | python-ai-integration.spec | 749 |
| -rw-r--r-- | sources | 1 |

3 files changed, 751 insertions, 0 deletions

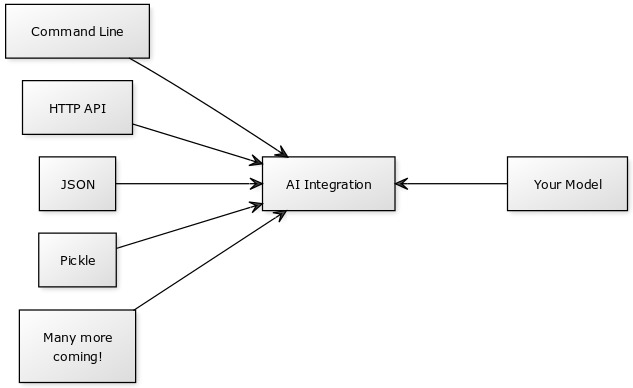

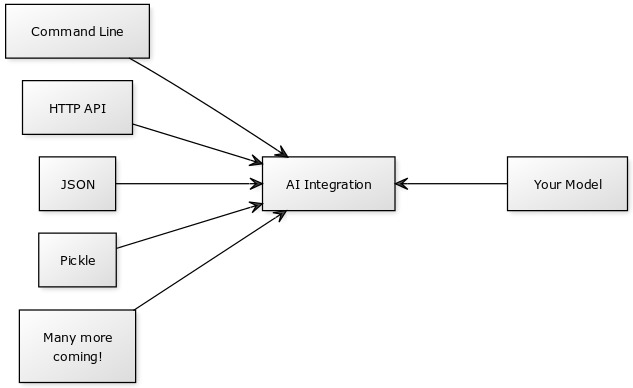

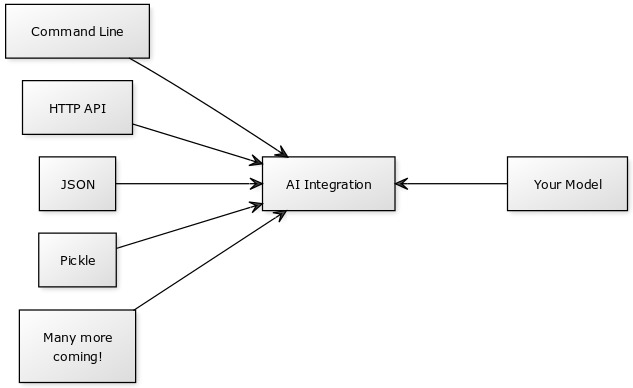

@@ -0,0 +1 @@
+/ai_integration-1.0.16.tar.gz
diff --git a/python-ai-integration.spec b/python-ai-integration.spec
new file mode 100644
index 0000000..7a3bb9d
--- /dev/null
+++ b/python-ai-integration.spec
@@ -0,0 +1,749 @@
+%global _empty_manifest_terminate_build 0
+Name: python-ai-integration
+Version: 1.0.16
+Release: 1
+Summary: AI Model Integration for python
+License: Apache 2.0
+URL: https://github.com/deepai-org/ai_integration
+Source0: https://mirrors.nju.edu.cn/pypi/web/packages/2e/a0/1a4f1a7bee6838afae38528b11e8ab8039b9355e6f01e580d501adc69045/ai_integration-1.0.16.tar.gz
+BuildArch: noarch
+
+Requires: python3-Pillow
+Requires: python3-Flask
+
+%description
+# ai_integration
+[](https://badge.fury.io/py/ai-integration)
+AI Model Integration for Python 2.7/3
+
+# Purpose
+### Expose your AI model under a standard interface so that you can run the model under a variety of usage modes and hosting platforms - all working seamlessly, automatically, with no code changes.
+### Designed to be as simple as possible to integrate.
+
+### Create a standard "ai_integration Docker Container Format" for interoperability.
+
+
+
+
+## Table of Contents
+
+- [Purpose](#purpose)
+- [Built-In Usage Modes](#built-in-usage-modes)
+- [Example Models](#example-models)
+- [How to call the integration library from your code](#how-to-call-the-integration-library-from-your-code)
+  * [Simplest Usage Example](#simplest-usage-example)
+- [Docker Container Format Requirements](#docker-container-format-requirements)
+- [Inputs Dicts](#inputs-dicts)
+- [Result Dicts](#result-dicts)
+- [Error Handling](#error-handling)
+- [Inputs Schema](#inputs-schema)
+    + [Schema Data Types](#schema-data-types)
+    + [Schema Examples](#schema-examples)
+      * [Single Image](#single-image)
+      * [Multi-Image](#multi-image)
+      * [Text](#text)
+- [Creating Usage Modes](#creating-usage-modes)
+
+# Built-In Usage Modes
+There are several built-in modes for testing:
+
+* Command Line using argparse (command_line)
+* HTTP Web UI / multipart POST API using Flask (http)
+* Pipe inputs dict as JSON (test_inputs_dict_json)
+* Pipe inputs dict as pickle (test_inputs_pickled_dict)
+* Pipe single image for models that take a single input named image (test_single_image)
+* Test single image models with a built-in solid gray image (test_model_integration)
+
+# Example Models
+* [Tensorflow AdaIN Style Transfer](https://github.com/deepai-org/tf-adain-style-transfer)
+* [Sentiment Analysis](https://github.com/deepai-org/sentiment-analysis)
+* [Deep Dream](https://github.com/deepai-org/deepdream)
+* [Open NSFW](https://github.com/deepai-org/open_nsfw)
+* [Super Resolution](https://github.com/deepai-org/tf-super-resolution)
+* [GPT-2 Text Generator](https://github.com/deepai-org/GPT2)
+* [StyleGAN Face Generator](https://github.com/deepai-org/face-generator)
+* [DeOldify Black-and-white Image Colorizer](https://github.com/deepai-org/DeOldify)
+
+# Contribution
+
+`ai_integration` is a community project developed under the free Apache 2.0 license. We welcome any new modes, integrations, bugfixes, and your ideas.
+
+# How to call the integration library from your code
+
+(An older version of this library required the user to expose their model as an inference function, but this caused pain in users and is no longer needed.)
+
+Run a "while True:" loop in your code and call "get_next_input" to get inputs.
+
+Pass an inputs_schema (see full docs below) to "get_next_input".
+
+See the specification below for "Inputs Dicts"
+
+"get_next_input" needs to be called using a "with" block as demonstrated below.
+
+Then process the data. Format the result or error as described under "Results Dicts"
+
+Then send the result (or error back) with "send_result".
+
+## Simplest Usage Example
+
+This example takes an image and returns a constant string without even looking at the input. It is a very bad AI algorithm for sure!
+
+```python
+import ai_integration
+
+while True:
+    with ai_integration.get_next_input(inputs_schema={"image": {"type": "image"}}) as inputs_dict:
+        # If an exception happens in this 'with' block, it will be sent back to the ai_integration library
+        result_data = {
+            "content-type": 'text/plain',
+            "data": "Fake output",
+            "success": True
+        }
+        ai_integration.send_result(result_data)
+
+
+```
+
+# Docker Container Format Requirements:
+
+#### This library is intended to allow the creation of standardized docker containers. This is the standard:
+
+1. Use the ai_integration library
+
+2. You install this library with pip (or pip3)
+
+3. ENTRYPOINT is used to set your python code as the entry point into the container.
+
+4. No command line arguments will be passed to your python entrypoint. (Unless using the command line interface mode)
+
+5. Do not use argparse in your program as this will conflict with command line mode.
+
+To test your finished container's integration, run:
+ * nvidia-docker run --rm -it -e MODE=test_model_integration YOUR_DOCKER_IMAGE_NAME
+ * use docker instead of nvidia-docker if you aren't using NVIDIA...
+ * You should see a bunch of happy messages. Any sad messages or exceptions indicate an error.
+ * It will try inference a few times. If you don't see this happening, something is not integrated right.
+
+
+# Inputs Dicts
+
+inputs_dict is a regular python dictionary.
+
+- Keys are input names (typically image, or style, content)
+- Values are the data itself. Either byte array of JPEG data (for images) or text string.
+- Any model options are also passed here and may be strings or numbers. Best to accept either strings/numbers in your model.
+
+
+
+# Result Dicts
+
+Content-type, a MIME type, inspired by HTTP, helps to inform the type of the "data" field
+
+success is a boolean.
+
+"error" should be the error message if success is False.
+
+
+```python
+{
+    'content-type': 'application/json', # or image/jpeg
+    'data': "{JSON data or image data as byte buffer}",
+    'success': True,
+    'error': 'the error message (only if failed)'
+}
+```
+
+# Error Handling
+
+If there's an error that you can catch:
+- set content-type to text/plain
+- set success to False
+- set data to None
+- set error to the best description of the error (perhaps the output of traceback.format_exc())
+
+# Inputs Schema
+
+An inputs schema is a simple python dict {} that documents the inputs required by your inference function.
+
+Not every integration mode looks at the inputs schema - think of it as a hint for telling the mode what data it needs to provide your function.
+
+All mentioned inputs are assumed required by default.
+
+The keys are names, the values specify properties of the input.
+
+### Schema Data Types
+- image
+- text
+- Suggest other types to add to the specification!
+
+### Schema Examples
+
+##### Single Image
+By convention, name your input "image" if you accept a single image input
+```python
+{
+    "image": {
+        "type": "image"
+    }
+}
+```
+
+##### Multi-Image
+For example, imagine a style transfer model that needs two input images.
+```python
+{
+    "style": {
+        "type": "image"
+    },
+    "content": {
+        "type": "image"
+    },
+}
+```
+
+##### Text
+```python
+{
+    "sentence": {
+        "type": "text"
+    }
+}
+```
+
+# Creating Usage Modes
+
+A mode is a function that lives in a file in the modes folder of this library.
+
+
+To create a new mode:
+
+1. Add a python file in this folder
+2. Add a python function to your file that takes two args:
+
+    def http(inference_function=None, inputs_schema=None):
+3. Attach a hint to your function
+4. At the end of the file, declare the modes from your file (each python file could export multiple modes), for example:
+```python
+MODULE_MODES = {
+    'http': http
+}
+
+```
+
+Your mode will be called with the inference function and inference schema, the rest is up to you!
+
+The sky is the limit, you can integrate with pretty much anything.
+
+See existing modes for examples.
+
+
+
+
+%package -n python3-ai-integration
+Summary: AI Model Integration for python
+Provides: python-ai-integration
+BuildRequires: python3-devel
+BuildRequires: python3-setuptools
+BuildRequires: python3-pip
+%description -n python3-ai-integration
+# ai_integration
+[](https://badge.fury.io/py/ai-integration)
+AI Model Integration for Python 2.7/3
+
+# Purpose
+### Expose your AI model under a standard interface so that you can run the model under a variety of usage modes and hosting platforms - all working seamlessly, automatically, with no code changes.
+### Designed to be as simple as possible to integrate.
+
+### Create a standard "ai_integration Docker Container Format" for interoperability.
+
+
+
+
+## Table of Contents
+
+- [Purpose](#purpose)
+- [Built-In Usage Modes](#built-in-usage-modes)
+- [Example Models](#example-models)
+- [How to call the integration library from your code](#how-to-call-the-integration-library-from-your-code)
+  * [Simplest Usage Example](#simplest-usage-example)
+- [Docker Container Format Requirements](#docker-container-format-requirements)
+- [Inputs Dicts](#inputs-dicts)
+- [Result Dicts](#result-dicts)
+- [Error Handling](#error-handling)
+- [Inputs Schema](#inputs-schema)
+    + [Schema Data Types](#schema-data-types)
+    + [Schema Examples](#schema-examples)
+      * [Single Image](#single-image)
+      * [Multi-Image](#multi-image)
+      * [Text](#text)
+- [Creating Usage Modes](#creating-usage-modes)
+
+# Built-In Usage Modes
+There are several built-in modes for testing:
+
+* Command Line using argparse (command_line)
+* HTTP Web UI / multipart POST API using Flask (http)
+* Pipe inputs dict as JSON (test_inputs_dict_json)
+* Pipe inputs dict as pickle (test_inputs_pickled_dict)
+* Pipe single image for models that take a single input named image (test_single_image)
+* Test single image models with a built-in solid gray image (test_model_integration)
+
+# Example Models
+* [Tensorflow AdaIN Style Transfer](https://github.com/deepai-org/tf-adain-style-transfer)
+* [Sentiment Analysis](https://github.com/deepai-org/sentiment-analysis)
+* [Deep Dream](https://github.com/deepai-org/deepdream)
+* [Open NSFW](https://github.com/deepai-org/open_nsfw)
+* [Super Resolution](https://github.com/deepai-org/tf-super-resolution)
+* [GPT-2 Text Generator](https://github.com/deepai-org/GPT2)
+* [StyleGAN Face Generator](https://github.com/deepai-org/face-generator)
+* [DeOldify Black-and-white Image Colorizer](https://github.com/deepai-org/DeOldify)
+
+# Contribution
+
+`ai_integration` is a community project developed under the free Apache 2.0 license. We welcome any new modes, integrations, bugfixes, and your ideas.
+
+# How to call the integration library from your code
+
+(An older version of this library required the user to expose their model as an inference function, but this caused pain in users and is no longer needed.)
+
+Run a "while True:" loop in your code and call "get_next_input" to get inputs.
+
+Pass an inputs_schema (see full docs below) to "get_next_input".
+
+See the specification below for "Inputs Dicts"
+
+"get_next_input" needs to be called using a "with" block as demonstrated below.
+
+Then process the data. Format the result or error as described under "Results Dicts"
+
+Then send the result (or error back) with "send_result".
+
+## Simplest Usage Example
+
+This example takes an image and returns a constant string without even looking at the input. It is a very bad AI algorithm for sure!
+
+```python
+import ai_integration
+
+while True:
+    with ai_integration.get_next_input(inputs_schema={"image": {"type": "image"}}) as inputs_dict:
+        # If an exception happens in this 'with' block, it will be sent back to the ai_integration library
+        result_data = {
+            "content-type": 'text/plain',
+            "data": "Fake output",
+            "success": True
+        }
+        ai_integration.send_result(result_data)
+
+
+```
+
+# Docker Container Format Requirements:
+
+#### This library is intended to allow the creation of standardized docker containers. This is the standard:
+
+1. Use the ai_integration library
+
+2. You install this library with pip (or pip3)
+
+3. ENTRYPOINT is used to set your python code as the entry point into the container.
+
+4. No command line arguments will be passed to your python entrypoint. (Unless using the command line interface mode)
+
+5. Do not use argparse in your program as this will conflict with command line mode.
+
+To test your finished container's integration, run:
+ * nvidia-docker run --rm -it -e MODE=test_model_integration YOUR_DOCKER_IMAGE_NAME
+ * use docker instead of nvidia-docker if you aren't using NVIDIA...
+ * You should see a bunch of happy messages. Any sad messages or exceptions indicate an error.
+ * It will try inference a few times. If you don't see this happening, something is not integrated right.
+
+
+# Inputs Dicts
+
+inputs_dict is a regular python dictionary.
+
+- Keys are input names (typically image, or style, content)
+- Values are the data itself. Either byte array of JPEG data (for images) or text string.
+- Any model options are also passed here and may be strings or numbers. Best to accept either strings/numbers in your model.
+
+
+
+# Result Dicts
+
+Content-type, a MIME type, inspired by HTTP, helps to inform the type of the "data" field
+
+success is a boolean.
+
+"error" should be the error message if success is False.
+
+
+```python
+{
+    'content-type': 'application/json', # or image/jpeg
+    'data': "{JSON data or image data as byte buffer}",
+    'success': True,
+    'error': 'the error message (only if failed)'
+}
+```
+
+# Error Handling
+
+If there's an error that you can catch:
+- set content-type to text/plain
+- set success to False
+- set data to None
+- set error to the best description of the error (perhaps the output of traceback.format_exc())
+
+# Inputs Schema
+
+An inputs schema is a simple python dict {} that documents the inputs required by your inference function.
+
+Not every integration mode looks at the inputs schema - think of it as a hint for telling the mode what data it needs to provide your function.
+
+All mentioned inputs are assumed required by default.
+
+The keys are names, the values specify properties of the input.
+
+### Schema Data Types
+- image
+- text
+- Suggest other types to add to the specification!
+
+### Schema Examples
+
+##### Single Image
+By convention, name your input "image" if you accept a single image input
+```python
+{
+    "image": {
+        "type": "image"
+    }
+}
+```
+
+##### Multi-Image
+For example, imagine a style transfer model that needs two input images.
+```python
+{
+    "style": {
+        "type": "image"
+    },
+    "content": {
+        "type": "image"
+    },
+}
+```
+
+##### Text
+```python
+{
+    "sentence": {
+        "type": "text"
+    }
+}
+```
+
+# Creating Usage Modes
+
+A mode is a function that lives in a file in the modes folder of this library.
+
+
+To create a new mode:
+
+1. Add a python file in this folder
+2. Add a python function to your file that takes two args:
+
+    def http(inference_function=None, inputs_schema=None):
+3. Attach a hint to your function
+4. At the end of the file, declare the modes from your file (each python file could export multiple modes), for example:
+```python
+MODULE_MODES = {
+    'http': http
+}
+
+```
+
+Your mode will be called with the inference function and inference schema, the rest is up to you!
+
+The sky is the limit, you can integrate with pretty much anything.
+
+See existing modes for examples.
+
+
+
+
+%package help
+Summary: Development documents and examples for ai-integration
+Provides: python3-ai-integration-doc
+%description help
+# ai_integration
+[](https://badge.fury.io/py/ai-integration)
+AI Model Integration for Python 2.7/3
+
+# Purpose
+### Expose your AI model under a standard interface so that you can run the model under a variety of usage modes and hosting platforms - all working seamlessly, automatically, with no code changes.
+### Designed to be as simple as possible to integrate.
+
+### Create a standard "ai_integration Docker Container Format" for interoperability.
+
+
+
+
+## Table of Contents
+
+- [Purpose](#purpose)
+- [Built-In Usage Modes](#built-in-usage-modes)
+- [Example Models](#example-models)
+- [How to call the integration library from your code](#how-to-call-the-integration-library-from-your-code)
+  * [Simplest Usage Example](#simplest-usage-example)
+- [Docker Container Format Requirements](#docker-container-format-requirements)
+- [Inputs Dicts](#inputs-dicts)
+- [Result Dicts](#result-dicts)
+- [Error Handling](#error-handling)
+- [Inputs Schema](#inputs-schema)
+    + [Schema Data Types](#schema-data-types)
+    + [Schema Examples](#schema-examples)
+      * [Single Image](#single-image)
+      * [Multi-Image](#multi-image)
+      * [Text](#text)
+- [Creating Usage Modes](#creating-usage-modes)
+
+# Built-In Usage Modes
+There are several built-in modes for testing:
+
+* Command Line using argparse (command_line)
+* HTTP Web UI / multipart POST API using Flask (http)
+* Pipe inputs dict as JSON (test_inputs_dict_json)
+* Pipe inputs dict as pickle (test_inputs_pickled_dict)
+* Pipe single image for models that take a single input named image (test_single_image)
+* Test single image models with a built-in solid gray image (test_model_integration)
+
+# Example Models
+* [Tensorflow AdaIN Style Transfer](https://github.com/deepai-org/tf-adain-style-transfer)
+* [Sentiment Analysis](https://github.com/deepai-org/sentiment-analysis)
+* [Deep Dream](https://github.com/deepai-org/deepdream)
+* [Open NSFW](https://github.com/deepai-org/open_nsfw)
+* [Super Resolution](https://github.com/deepai-org/tf-super-resolution)
+* [GPT-2 Text Generator](https://github.com/deepai-org/GPT2)
+* [StyleGAN Face Generator](https://github.com/deepai-org/face-generator)
+* [DeOldify Black-and-white Image Colorizer](https://github.com/deepai-org/DeOldify)
+
+# Contribution
+
+`ai_integration` is a community project developed under the free Apache 2.0 license. We welcome any new modes, integrations, bugfixes, and your ideas.
+
+# How to call the integration library from your code
+
+(An older version of this library required the user to expose their model as an inference function, but this caused pain in users and is no longer needed.)
+
+Run a "while True:" loop in your code and call "get_next_input" to get inputs.
+
+Pass an inputs_schema (see full docs below) to "get_next_input".
+
+See the specification below for "Inputs Dicts"
+
+"get_next_input" needs to be called using a "with" block as demonstrated below.
+
+Then process the data. Format the result or error as described under "Results Dicts"
+
+Then send the result (or error back) with "send_result".
+
+## Simplest Usage Example
+
+This example takes an image and returns a constant string without even looking at the input. It is a very bad AI algorithm for sure!
+
+```python
+import ai_integration
+
+while True:
+    with ai_integration.get_next_input(inputs_schema={"image": {"type": "image"}}) as inputs_dict:
+        # If an exception happens in this 'with' block, it will be sent back to the ai_integration library
+        result_data = {
+            "content-type": 'text/plain',
+            "data": "Fake output",
+            "success": True
+        }
+        ai_integration.send_result(result_data)
+
+
+```
+
+# Docker Container Format Requirements:
+
+#### This library is intended to allow the creation of standardized docker containers. This is the standard:
+
+1. Use the ai_integration library
+
+2. You install this library with pip (or pip3)
+
+3. ENTRYPOINT is used to set your python code as the entry point into the container.
+
+4. No command line arguments will be passed to your python entrypoint. (Unless using the command line interface mode)
+
+5. Do not use argparse in your program as this will conflict with command line mode.
+
+To test your finished container's integration, run:
+ * nvidia-docker run --rm -it -e MODE=test_model_integration YOUR_DOCKER_IMAGE_NAME
+ * use docker instead of nvidia-docker if you aren't using NVIDIA...
+ * You should see a bunch of happy messages. Any sad messages or exceptions indicate an error.
+ * It will try inference a few times. If you don't see this happening, something is not integrated right.
+
+
+# Inputs Dicts
+
+inputs_dict is a regular python dictionary.
+
+- Keys are input names (typically image, or style, content)
+- Values are the data itself. Either byte array of JPEG data (for images) or text string.
+- Any model options are also passed here and may be strings or numbers. Best to accept either strings/numbers in your model.
+
+
+
+# Result Dicts
+
+Content-type, a MIME type, inspired by HTTP, helps to inform the type of the "data" field
+
+success is a boolean.
+
+"error" should be the error message if success is False.
+
+
+```python
+{
+    'content-type': 'application/json', # or image/jpeg
+    'data': "{JSON data or image data as byte buffer}",
+    'success': True,
+    'error': 'the error message (only if failed)'
+}
+```
+
+# Error Handling
+
+If there's an error that you can catch:
+- set content-type to text/plain
+- set success to False
+- set data to None
+- set error to the best description of the error (perhaps the output of traceback.format_exc())
+
+# Inputs Schema
+
+An inputs schema is a simple python dict {} that documents the inputs required by your inference function.
+
+Not every integration mode looks at the inputs schema - think of it as a hint for telling the mode what data it needs to provide your function.
+
+All mentioned inputs are assumed required by default.
+
+The keys are names, the values specify properties of the input.
+
+### Schema Data Types
+- image
+- text
+- Suggest other types to add to the specification!
+
+### Schema Examples
+
+##### Single Image
+By convention, name your input "image" if you accept a single image input
+```python
+{
+    "image": {
+        "type": "image"
+    }
+}
+```
+
+##### Multi-Image
+For example, imagine a style transfer model that needs two input images.
+```python
+{
+    "style": {
+        "type": "image"
+    },
+    "content": {
+        "type": "image"
+    },
+}
+```
+
+##### Text
+```python
+{
+    "sentence": {
+        "type": "text"
+    }
+}
+```
+
+# Creating Usage Modes
+
+A mode is a function that lives in a file in the modes folder of this library.
+
+
+To create a new mode:
+
+1. Add a python file in this folder
+2. Add a python function to your file that takes two args:
+
+    def http(inference_function=None, inputs_schema=None):
+3. Attach a hint to your function
+4. At the end of the file, declare the modes from your file (each python file could export multiple modes), for example:
+```python
+MODULE_MODES = {
+    'http': http
+}
+
+```
+
+Your mode will be called with the inference function and inference schema, the rest is up to you!
+
+The sky is the limit, you can integrate with pretty much anything.
+
+See existing modes for examples.
+
+
+
+
+%prep
+%autosetup -n ai-integration-1.0.16
+
+%build
+%py3_build
+
+%install
+%py3_install
+install -d -m755 %{buildroot}/%{_pkgdocdir}
+if [ -d doc ]; then cp -arf doc %{buildroot}/%{_pkgdocdir}; fi
+if [ -d docs ]; then cp -arf docs %{buildroot}/%{_pkgdocdir}; fi
+if [ -d example ]; then cp -arf example %{buildroot}/%{_pkgdocdir}; fi
+if [ -d examples ]; then cp -arf examples %{buildroot}/%{_pkgdocdir}; fi
+pushd %{buildroot}
+if [ -d usr/lib ]; then
+    find usr/lib -type f -printf "/%h/%f\n" >> filelist.lst
+fi
+if [ -d usr/lib64 ]; then
+    find usr/lib64 -type f -printf "/%h/%f\n" >> filelist.lst
+fi
+if [ -d usr/bin ]; then
+    find usr/bin -type f -printf "/%h/%f\n" >> filelist.lst
+fi
+if [ -d usr/sbin ]; then
+    find usr/sbin -type f -printf "/%h/%f\n" >> filelist.lst
+fi
+touch doclist.lst
+if [ -d usr/share/man ]; then
+    find usr/share/man -type f -printf "/%h/%f.gz\n" >> doclist.lst
+fi
+popd
+mv %{buildroot}/filelist.lst .
+mv %{buildroot}/doclist.lst .
+
+%files -n python3-ai-integration -f filelist.lst
+%dir %{python3_sitelib}/*
+
+%files help -f doclist.lst
+%{_docdir}/*
+
+%changelog
+* Mon May 29 2023 Python_Bot <Python_Bot@openeuler.org> - 1.0.16-1
+- Package Spec generated
@@ -0,0 +1 @@
+9df0c4661c1b0526daaca3236f464ea7 ai_integration-1.0.16.tar.gz