
| author | CoprDistGit <infra@openeuler.org> | 2023-05-05 11:03:25 +0000 |
|---|---|---|
| committer | CoprDistGit <infra@openeuler.org> | 2023-05-05 11:03:25 +0000 |
| commit | 9c60114649d34bc23c533d0bd6c082cf4017b34d | |
| tree | 6c4efd637157bb6aaa8069343978dd3ac40c8e65 | |
| parent | f42b24ca03637888fd669655c63b8ff9e898f15b | |

automatic import of python-madgrad (openeuler20.03)

| Mode | File | Lines changed |
|---|---|---|
| -rw-r--r-- | .gitignore | 1 |
| -rw-r--r-- | python-madgrad.spec | 228 |
| -rw-r--r-- | sources | 1 |

3 files changed, 230 insertions, 0 deletions

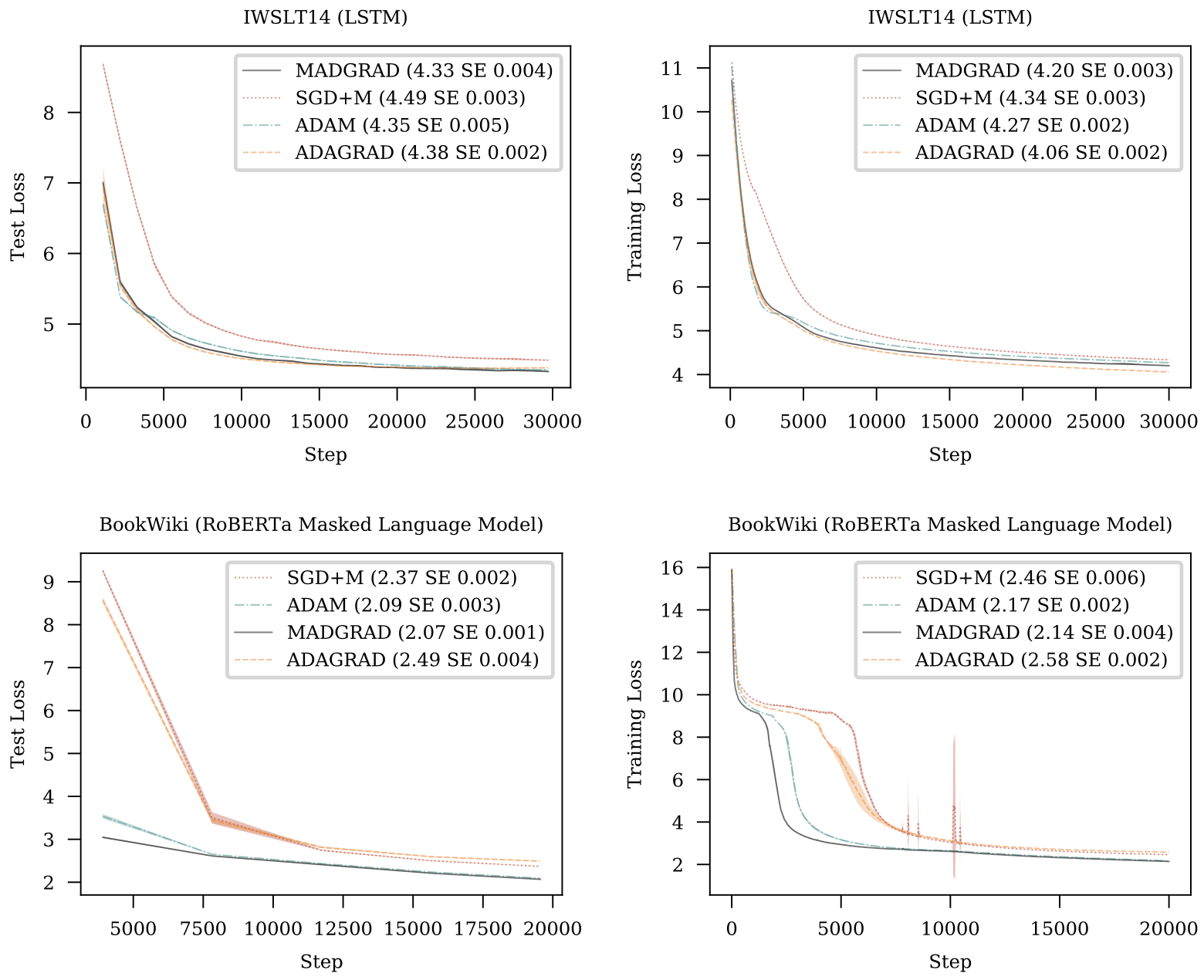

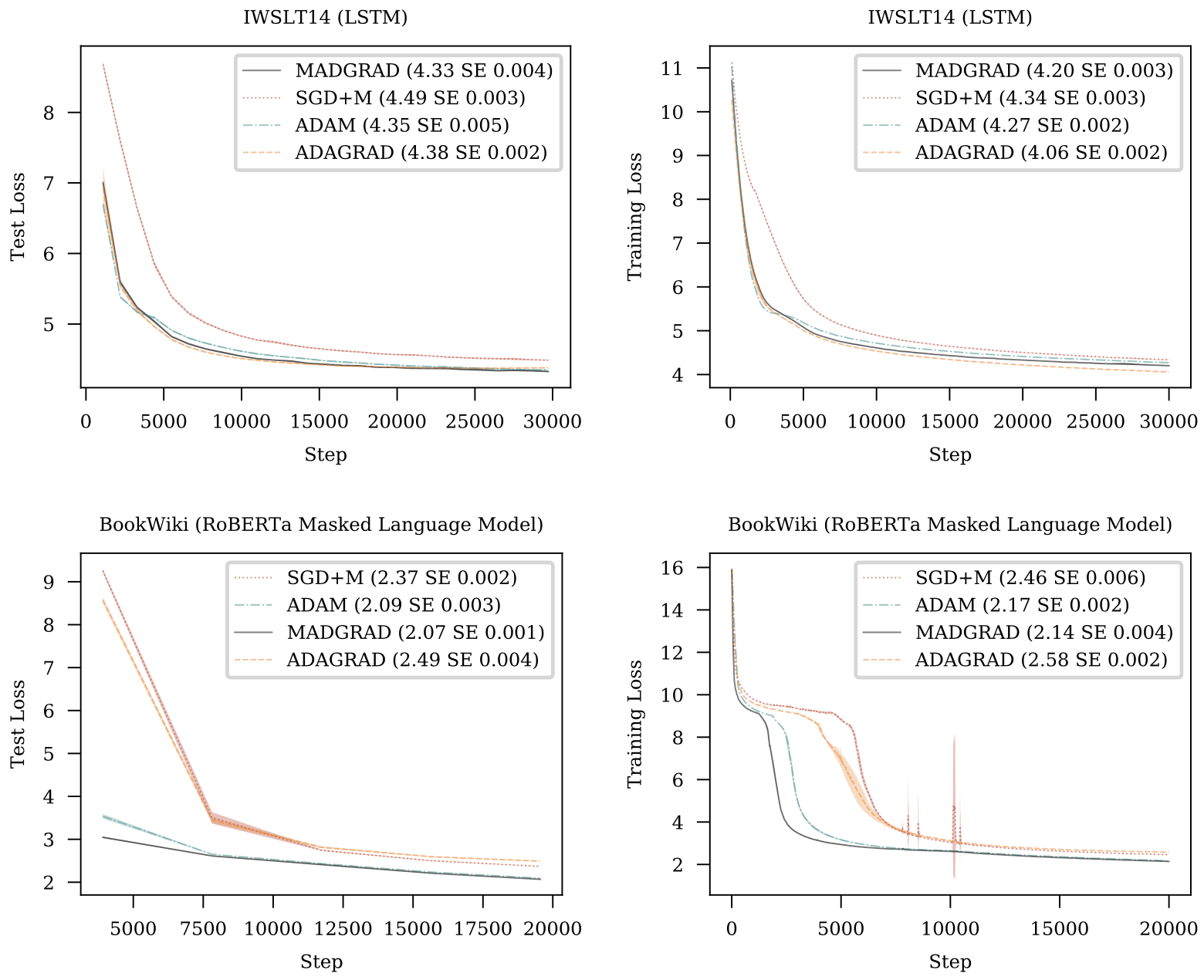

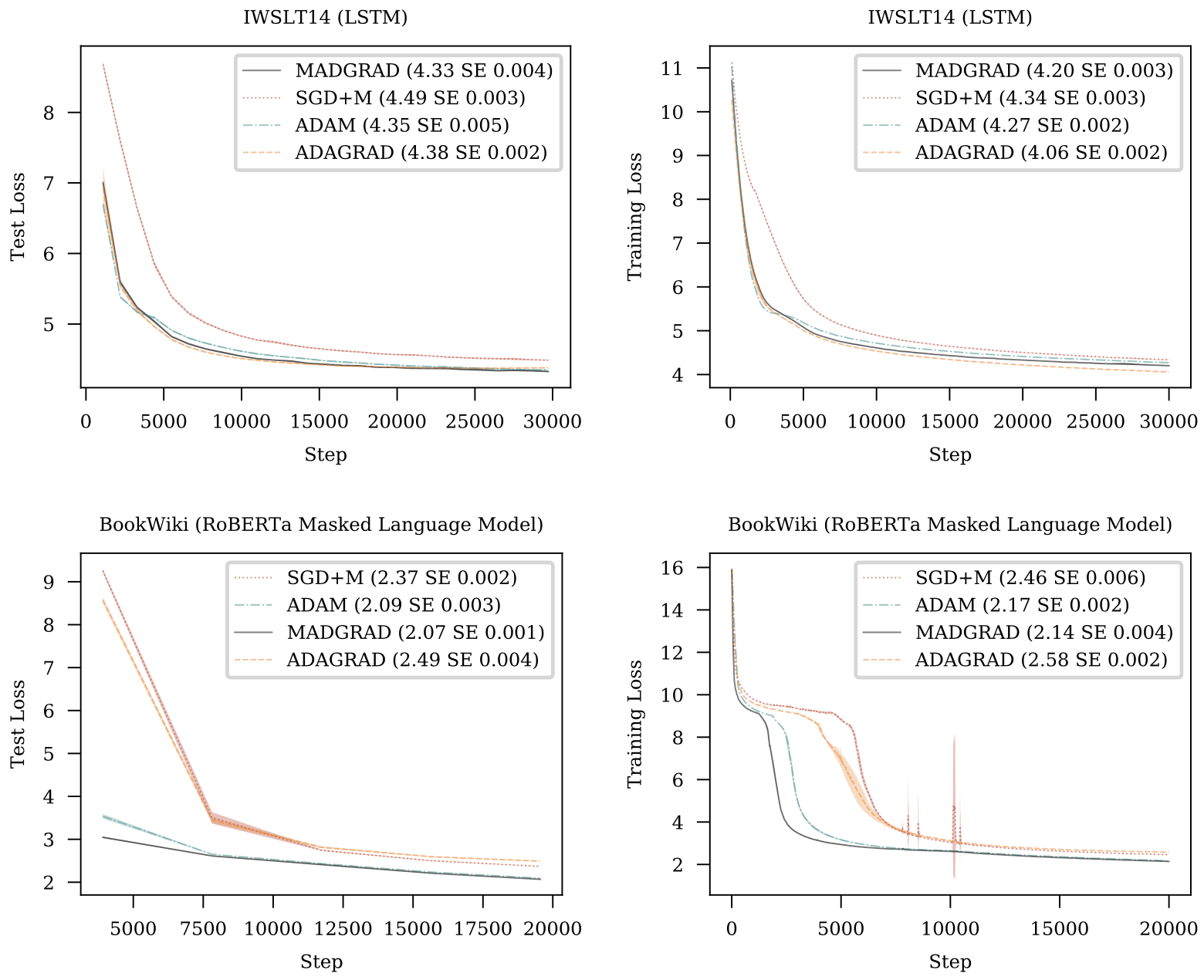

```diff
@@ -0,0 +1 @@
+/madgrad-1.3.tar.gz
diff --git a/python-madgrad.spec b/python-madgrad.spec
new file mode 100644
index 0000000..9ae1520
--- /dev/null
+++ b/python-madgrad.spec
@@ -0,0 +1,228 @@
+%global _empty_manifest_terminate_build 0
+Name: python-madgrad
+Version: 1.3
+Release: 1
+Summary: A general purpose PyTorch Optimizer
+License: MIT License
+URL: https://github.com/facebookresearch/madgrad
+Source0: https://mirrors.nju.edu.cn/pypi/web/packages/18/ea/95435c2c4d55f51375468dfd1721d88a73f93991b765c5a04366d36472f2/madgrad-1.3.tar.gz
+BuildArch: noarch
+
+
+%description
+
+# MADGRAD Optimization Method
+
+A Momentumized, Adaptive, Dual Averaged Gradient Method for Stochastic Optimization
+
+Documentation availiable at https://madgrad.readthedocs.io/en/latest/.
+
+
+``` pip install madgrad ```
+
+Try it out! A best-of-both-worlds optimizer with the generalization performance of SGD and at least as fast convergence as that of Adam, often faster. A drop-in torch.optim implementation `madgrad.MADGRAD` is provided, as well as a FairSeq wrapped instance. For FairSeq, just import madgrad anywhere in your project files and use the `--optimizer madgrad` command line option, together with `--weight-decay`, `--momentum`, and optionally `--madgrad_eps`.
+
+The madgrad.py file containing the optimizer can be directly dropped into any PyTorch project if you don't want to install via pip. If you are using fairseq, you need the acompanying fairseq_madgrad.py file as well.
+
+## Things to note:
+ - You may need to use a lower weight decay than you are accustomed to. Often 0.
+ - You should do a full learning rate sweep as the optimal learning rate will be different from SGD or Adam. Best LR values we found were 2.5e-4 for 152 layer PreActResNet on CIFAR10, 0.001 for ResNet-50 on ImageNet, 0.025 for IWSLT14 using `transformer_iwslt_de_en` and 0.005 for RoBERTa training on BookWiki using `BERT_BASE`. On NLP models gradient clipping also helped.
+
+# Mirror MADGRAD
+The mirror descent version of MADGRAD is also included as `madgrad.MirrorMADGRAD`. This version works extremely well, even better than MADGRAD, on large-scale transformer training. This version is recommended for any problem where the datasets are big enough that generalization gap is not an issue.
+
+As the mirror descent version does not implicitly regularize, you can usually use weight
+decay values that work well with other optimizers.
+
+# Tech Report
+
+[Adaptivity without Compromise: A Momentumized, Adaptive, Dual Averaged Gradient Method for Stochastic Optimization](https://arxiv.org/abs/2101.11075)
+
+We introduce MADGRAD, a novel optimization method in the family of AdaGrad adaptive gradient methods. MADGRAD shows excellent performance on deep learning optimization problems from multiple fields, including classification and image-to-image tasks in vision, and recurrent and bidirectionally-masked models in natural language processing. For each of these tasks, MADGRAD matches or outperforms both SGD and ADAM in test set performance, even on problems for which adaptive methods normally perform poorly.
+
+
+```BibTeX
+@misc{defazio2021adaptivity,
+ title={Adaptivity without Compromise: A Momentumized, Adaptive, Dual Averaged Gradient Method for Stochastic Optimization},
+ author={Aaron Defazio and Samy Jelassi},
+ year={2021},
+ eprint={2101.11075},
+ archivePrefix={arXiv},
+ primaryClass={cs.LG}
+}
+```
+
+# Results
+
+
+
+
+# License
+
+MADGRAD is licensed under the [MIT License](LICENSE).
+
+
+
+
+%package -n python3-madgrad
+Summary: A general purpose PyTorch Optimizer
+Provides: python-madgrad
+BuildRequires: python3-devel
+BuildRequires: python3-setuptools
+BuildRequires: python3-pip
+%description -n python3-madgrad
+
+# MADGRAD Optimization Method
+
+A Momentumized, Adaptive, Dual Averaged Gradient Method for Stochastic Optimization
+
+Documentation availiable at https://madgrad.readthedocs.io/en/latest/.
+
+
+``` pip install madgrad ```
+
+Try it out! A best-of-both-worlds optimizer with the generalization performance of SGD and at least as fast convergence as that of Adam, often faster. A drop-in torch.optim implementation `madgrad.MADGRAD` is provided, as well as a FairSeq wrapped instance. For FairSeq, just import madgrad anywhere in your project files and use the `--optimizer madgrad` command line option, together with `--weight-decay`, `--momentum`, and optionally `--madgrad_eps`.
+
+The madgrad.py file containing the optimizer can be directly dropped into any PyTorch project if you don't want to install via pip. If you are using fairseq, you need the acompanying fairseq_madgrad.py file as well.
+
+## Things to note:
+ - You may need to use a lower weight decay than you are accustomed to. Often 0.
+ - You should do a full learning rate sweep as the optimal learning rate will be different from SGD or Adam. Best LR values we found were 2.5e-4 for 152 layer PreActResNet on CIFAR10, 0.001 for ResNet-50 on ImageNet, 0.025 for IWSLT14 using `transformer_iwslt_de_en` and 0.005 for RoBERTa training on BookWiki using `BERT_BASE`. On NLP models gradient clipping also helped.
+
+# Mirror MADGRAD
+The mirror descent version of MADGRAD is also included as `madgrad.MirrorMADGRAD`. This version works extremely well, even better than MADGRAD, on large-scale transformer training. This version is recommended for any problem where the datasets are big enough that generalization gap is not an issue.
+
+As the mirror descent version does not implicitly regularize, you can usually use weight
+decay values that work well with other optimizers.
+
+# Tech Report
+
+[Adaptivity without Compromise: A Momentumized, Adaptive, Dual Averaged Gradient Method for Stochastic Optimization](https://arxiv.org/abs/2101.11075)
+
+We introduce MADGRAD, a novel optimization method in the family of AdaGrad adaptive gradient methods. MADGRAD shows excellent performance on deep learning optimization problems from multiple fields, including classification and image-to-image tasks in vision, and recurrent and bidirectionally-masked models in natural language processing. For each of these tasks, MADGRAD matches or outperforms both SGD and ADAM in test set performance, even on problems for which adaptive methods normally perform poorly.
+
+
+```BibTeX
+@misc{defazio2021adaptivity,
+ title={Adaptivity without Compromise: A Momentumized, Adaptive, Dual Averaged Gradient Method for Stochastic Optimization},
+ author={Aaron Defazio and Samy Jelassi},
+ year={2021},
+ eprint={2101.11075},
+ archivePrefix={arXiv},
+ primaryClass={cs.LG}
+}
+```
+
+# Results
+
+
+
+
+# License
+
+MADGRAD is licensed under the [MIT License](LICENSE).
+
+
+
+
+%package help
+Summary: Development documents and examples for madgrad
+Provides: python3-madgrad-doc
+%description help
+
+# MADGRAD Optimization Method
+
+A Momentumized, Adaptive, Dual Averaged Gradient Method for Stochastic Optimization
+
+Documentation availiable at https://madgrad.readthedocs.io/en/latest/.
+
+
+``` pip install madgrad ```
+
+Try it out! A best-of-both-worlds optimizer with the generalization performance of SGD and at least as fast convergence as that of Adam, often faster. A drop-in torch.optim implementation `madgrad.MADGRAD` is provided, as well as a FairSeq wrapped instance. For FairSeq, just import madgrad anywhere in your project files and use the `--optimizer madgrad` command line option, together with `--weight-decay`, `--momentum`, and optionally `--madgrad_eps`.
+
+The madgrad.py file containing the optimizer can be directly dropped into any PyTorch project if you don't want to install via pip. If you are using fairseq, you need the acompanying fairseq_madgrad.py file as well.
+
+## Things to note:
+ - You may need to use a lower weight decay than you are accustomed to. Often 0.
+ - You should do a full learning rate sweep as the optimal learning rate will be different from SGD or Adam. Best LR values we found were 2.5e-4 for 152 layer PreActResNet on CIFAR10, 0.001 for ResNet-50 on ImageNet, 0.025 for IWSLT14 using `transformer_iwslt_de_en` and 0.005 for RoBERTa training on BookWiki using `BERT_BASE`. On NLP models gradient clipping also helped.
+
+# Mirror MADGRAD
+The mirror descent version of MADGRAD is also included as `madgrad.MirrorMADGRAD`. This version works extremely well, even better than MADGRAD, on large-scale transformer training. This version is recommended for any problem where the datasets are big enough that generalization gap is not an issue.
+
+As the mirror descent version does not implicitly regularize, you can usually use weight
+decay values that work well with other optimizers.
+
+# Tech Report
+
+[Adaptivity without Compromise: A Momentumized, Adaptive, Dual Averaged Gradient Method for Stochastic Optimization](https://arxiv.org/abs/2101.11075)
+
+We introduce MADGRAD, a novel optimization method in the family of AdaGrad adaptive gradient methods. MADGRAD shows excellent performance on deep learning optimization problems from multiple fields, including classification and image-to-image tasks in vision, and recurrent and bidirectionally-masked models in natural language processing. For each of these tasks, MADGRAD matches or outperforms both SGD and ADAM in test set performance, even on problems for which adaptive methods normally perform poorly.
+
+
+```BibTeX
+@misc{defazio2021adaptivity,
+ title={Adaptivity without Compromise: A Momentumized, Adaptive, Dual Averaged Gradient Method for Stochastic Optimization},
+ author={Aaron Defazio and Samy Jelassi},
+ year={2021},
+ eprint={2101.11075},
+ archivePrefix={arXiv},
+ primaryClass={cs.LG}
+}
+```
+
+# Results
+
+
+
+
+# License
+
+MADGRAD is licensed under the [MIT License](LICENSE).
+
+
+
+
+%prep
+%autosetup -n madgrad-1.3
+
+%build
+%py3_build
+
+%install
+%py3_install
+install -d -m755 %{buildroot}/%{_pkgdocdir}
+if [ -d doc ]; then cp -arf doc %{buildroot}/%{_pkgdocdir}; fi
+if [ -d docs ]; then cp -arf docs %{buildroot}/%{_pkgdocdir}; fi
+if [ -d example ]; then cp -arf example %{buildroot}/%{_pkgdocdir}; fi
+if [ -d examples ]; then cp -arf examples %{buildroot}/%{_pkgdocdir}; fi
+pushd %{buildroot}
+if [ -d usr/lib ]; then
+ find usr/lib -type f -printf "/%h/%f\n" >> filelist.lst
+fi
+if [ -d usr/lib64 ]; then
+ find usr/lib64 -type f -printf "/%h/%f\n" >> filelist.lst
+fi
+if [ -d usr/bin ]; then
+ find usr/bin -type f -printf "/%h/%f\n" >> filelist.lst
+fi
+if [ -d usr/sbin ]; then
+ find usr/sbin -type f -printf "/%h/%f\n" >> filelist.lst
+fi
+touch doclist.lst
+if [ -d usr/share/man ]; then
+ find usr/share/man -type f -printf "/%h/%f.gz\n" >> doclist.lst
+fi
+popd
+mv %{buildroot}/filelist.lst .
+mv %{buildroot}/doclist.lst .
+
+%files -n python3-madgrad -f filelist.lst
+%dir %{python3_sitelib}/*
+
+%files help -f doclist.lst
+%{_docdir}/*
+
+%changelog
+* Fri May 05 2023 Python_Bot <Python_Bot@openeuler.org> - 1.3-1
+- Package Spec generated
@@ -0,0 +1 @@
+a346d364aa0cadea5e53dd6cfc37615e madgrad-1.3.tar.gz
```
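The packaged description names the method (momentumized, adaptive, dual averaged) but does not spell out the update. The following is a minimal scalar sketch of a MADGRAD-style step as we read it from the linked tech report: a weighted gradient sum `s`, a weighted sum of squared gradients `v` whose cube root scales the step, weights `lamb = lr * sqrt(k + 1)`, and momentum applied as an exponential average toward the dual-averaging iterate `z`, which stays anchored at the starting point `x0`. It is an illustration only: it omits weight decay and sparse gradients, it is not the packaged `madgrad.MADGRAD` (which remains the reference for real training), and the function name `madgrad_minimize` is hypothetical.

```python
import math


def madgrad_minimize(grad_fn, x0, lr=0.01, momentum=0.9, eps=1e-6, steps=100):
    """Run a scalar MADGRAD-style iteration from x0 and return the final point.

    grad_fn(x) must return the (possibly stochastic) gradient at x.
    Hypothetical helper for illustration; not part of the madgrad package.
    """
    s = 0.0  # weighted sum of gradients (dual-averaging numerator)
    v = 0.0  # weighted sum of squared gradients
    x = x0
    ck = 1.0 - momentum  # weight given to the new dual-averaging iterate
    for k in range(steps):
        g = grad_fn(x)
        lamb = lr * math.sqrt(k + 1)  # step weight grows like sqrt(k + 1)
        s += lamb * g
        v += lamb * g * g
        # Dual-averaging iterate: anchored at x0 and scaled by the cube root
        # of the accumulated squared gradients (eps avoids division by zero).
        z = x0 - s / (v ** (1.0 / 3.0) + eps)
        # Momentum as an exponential moving average of x toward z.
        x = (1.0 - ck) * x + ck * z
    return x
```

For example, `madgrad_minimize(lambda x: 2 * (x - 3), x0=0.0, lr=0.1, momentum=0.0, steps=200)` minimizes f(x) = (x - 3)^2 and ends close to 3. Note that the learning rate here is a toy value; as the description says, real models need their own learning-rate sweep.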
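The spec's `%install` scriptlet builds `filelist.lst` (regular files under `usr/lib`, `usr/lib64`, `usr/bin`, `usr/sbin`) and `doclist.lst` (files under `usr/share/man`, each listed with a `.gz` suffix) by running `find` from inside `%{buildroot}`. The sketch below approximates that logic in Python so you can predict what the `%files -f` sections will pick up for a given buildroot. It is not part of the package; `collect_files` and its parameters are hypothetical, and the output is sorted for determinism, whereas `find` emits entries in directory order.

```python
import os
from pathlib import Path

# Directories the %install scriptlet scans for filelist.lst.
FILELIST_DIRS = ("usr/lib", "usr/lib64", "usr/bin", "usr/sbin")
# Directory scanned for doclist.lst; its entries get a ".gz" suffix.
DOCLIST_DIRS = ("usr/share/man",)


def collect_files(buildroot, subdirs, suffix=""):
    """Approximate `find <subdir> -type f -printf "/%h/%f<suffix>\\n"` run from buildroot.

    Returns rooted paths such as "/usr/lib/..." for regular files only,
    skipping symlinks as `find -type f` does by default.
    """
    root = Path(buildroot)
    entries = []
    for sub in subdirs:
        top = root / sub
        if not top.is_dir():  # mirrors the `if [ -d ... ]` guard
            continue
        for dirpath, _dirnames, filenames in os.walk(top):
            for name in filenames:
                path = Path(dirpath, name)
                if path.is_symlink() or not path.is_file():
                    continue
                rel = path.relative_to(root).as_posix()
                entries.append("/" + rel + suffix)
    return sorted(entries)
```

Calling `collect_files(buildroot, FILELIST_DIRS)` and `collect_files(buildroot, DOCLIST_DIRS, suffix=".gz")` yields the would-be contents of the two list files. The `.gz` suffix reflects that man pages are expected to be compressed later in the build, after this scriptlet runs.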
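The `sources` file added by this commit holds one line: a 32-hex-digit digest followed by the tarball name. Assuming that digest is MD5 (the length matches, and dist-git `sources` files have traditionally used MD5 in this `digest filename` form), a downloaded `madgrad-1.3.tar.gz` can be checked against it with the standard library alone. The helper names below are hypothetical.

```python
import hashlib


def parse_sources_line(line):
    """Split a '<digest> <filename>' line into (digest, filename)."""
    digest, filename = line.split(maxsplit=1)
    return digest.lower(), filename.strip()


def md5_hexdigest(path, chunk_size=1 << 20):
    """Compute a file's MD5, reading it in chunks to bound memory use."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def matches_sources(line, path):
    """Return True if the file at `path` has the digest recorded in `line`."""
    expected, _filename = parse_sources_line(line)
    return md5_hexdigest(path) == expected
```

With the tarball in the current directory, `matches_sources("a346d364aa0cadea5e53dd6cfc37615e madgrad-1.3.tar.gz", "madgrad-1.3.tar.gz")` should return `True` if the download matches the committed digest. MD5 only guards against accidental corruption here, not against deliberate tampering.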